High-Fidelity Testing on a Shared Staging Environment

Towards High Fidelity Testing

Did you know that ‘Wi-Fi’ isn’t short for anything? It certainly sounds better than ‘IEEE 802.11b Direct Sequence,’ and it sounds similar to ‘Hi-Fi,’ which was a popular term for a home stereo when ‘Wi-Fi’ was coined. ‘Hi-Fi’ does have a meaning: high fidelity, the idea that the sound coming from your sound system closely resembles what the artist who made the music intended. That same design goal guides ‘High Fidelity Testing.’

High Fidelity Testing is an approach that involves testing on an environment that closely resembles production. In general, the term refers to ‘acceptance testing,’ ‘integration testing,’ or ‘end-to-end testing.’ This kind of testing is essential for ensuring that the product is exercised under realistic conditions and that bugs are detected before the product is released. The concept is a design goal without a precise definition, since all testing is ‘faked’ in some way, and even a canary deploy can contain code that will only fail at full scale.

To achieve High Fidelity Testing, it is necessary to create an environment that is as close to the production environment as possible. This includes using:

- data that resembles production

- live dependencies

- 3rd party integrations

- configuration that is closer to production

- traffic patterns that are closer to production

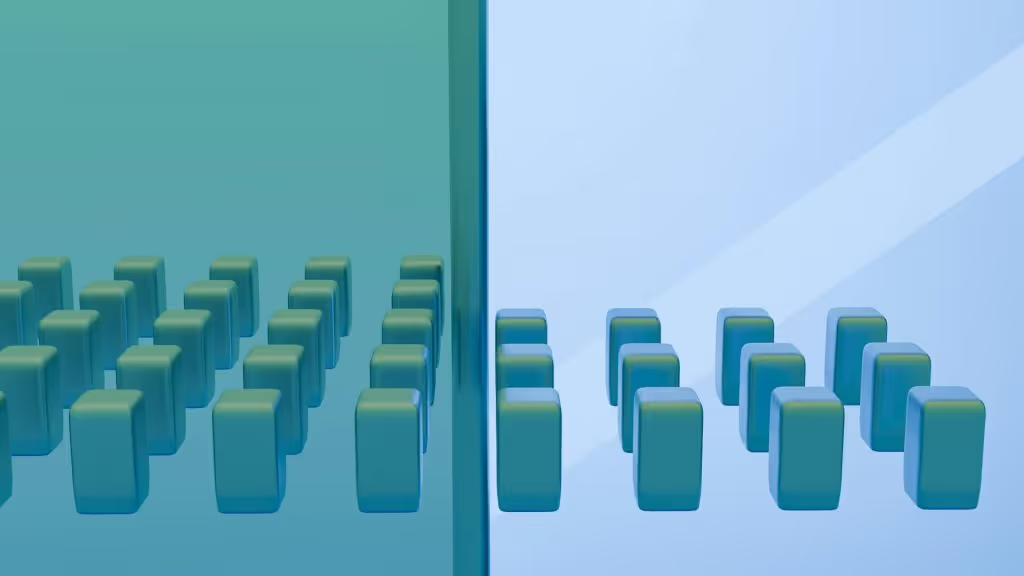

All of these requirements add significant complexity over a basic local replication environment. Most large teams do have a high-fidelity shared staging environment, but relying on it often means that multiple teams want to use shared staging at the same time.

Contention on Shared Environments

When first attempting to make a more accurate testing system, we end up with a shared environment (more on this in the next section). A shared environment is a natural starting place for better testing: things like production-like data and 3rd party integrations may not even be possible on local, atomized test environments, and it’s impractical to maintain a production-like configuration for every developer. Sharing brings its own problems, though:

- Typically, system and e2e tests are run on some cadence in this shared environment. When tests fail, however, it becomes very painful to troubleshoot and find the offending commit, because commits from many teams are commingled. Each test failure can take hours to debug and fix, and further aggravating factors can make the situation worse.

- As more teams become dependent on a shared testing environment (often ‘staging’), processes will need to be put in place around updates to staging. The ShareChat team spoke to us recently about how contention over access to a shared staging environment slowed down their dev process.

- With enough process in place, eventually large enough teams will start asking QA to file bugs when something isn’t working on staging. Imagine how much this can impact your velocity. The process to fix staging starts with time spent documenting the problem, and often requires multiple attempts to resolve and verify the fix.

- As these bugs start to surface, the env gets into an unstable state and impacts other teams that want to use it for testing. If you deploy a breaking change to this env, you impact all other teams that rely on staging. They are blocked until your change is reverted or fixed forward. This whole-team blocking is a primary downfall of a testing or staging environment that doesn’t closely resemble reality.

- You’ll often hear “staging is broken” given as a reason for delayed releases, problems with fixes, or even worse: the reason that an update pushed to prod wasn’t tested sufficiently.

In the next section we’ll discuss how to address the contention issue with shared staging envs.

How to address contention on shared staging environments: three common solutions

Lock staging

By preventing commits for a while, you prevent collisions and incompatibilities during your testing window. There are many Slack plugins for this exact task, which shows how common the problem is.

Pros

- Rollbacks are easier without the need to cherry-pick

- Keeps everyone aware of big releases, synchronizing things like on-call

- No one can break your team’s commits if they can’t commit at all :galaxybrain:

Cons

- This process quite literally blocks other teams’ progress releasing their code.

- While you prevent direct collisions, you’re still going to be faced with reconciliation issues: the team after you will need to test their changes against this new version of staging, possibly finding problems and prolonging the time that staging needs to be locked down.

In the end, as you can imagine, this does not scale as your team grows. It’s fine for small teams, especially those that have just grown out of a monolith stage.
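To make the locking idea concrete, here is a minimal sketch of the state a staging-lock tool might track. It is not the implementation of any particular Slack plugin; the `StagingLock` class, its method names, and the expiry behaviour are all assumptions made for illustration.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lock:
    owner: str         # team or person currently holding staging
    expires_at: float  # Unix timestamp after which the lock lapses


class StagingLock:
    """Hypothetical in-memory lock; a real tool would persist this somewhere shared."""

    def __init__(self) -> None:
        self._lock: Optional[Lock] = None

    def acquire(self, owner: str, ttl_seconds: float, now: Optional[float] = None) -> bool:
        """Take (or renew) the lock unless another owner holds an unexpired one."""
        now = time.time() if now is None else now
        held_by_other = (
            self._lock is not None
            and self._lock.expires_at > now
            and self._lock.owner != owner
        )
        if held_by_other:
            return False
        self._lock = Lock(owner=owner, expires_at=now + ttl_seconds)
        return True

    def release(self, owner: str) -> bool:
        """Only the current holder may release the lock."""
        if self._lock is not None and self._lock.owner == owner:
            self._lock = None
            return True
        return False
```

The expiry is a design choice in this sketch: without it, a forgotten lock blocks every other team indefinitely, which is exactly the scaling problem described above.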

Clone staging

You can mitigate the contention by cloning staging envs. Often these clones will be large and logical, with whole departments given their own clones to work on to avoid needless conflicts and lockouts.

Pros

- No longer fighting over a single staging environment.

- Can test out environment-level changes (e.g. changes to your Kubernetes configuration) without breaking staging for everyone.

Cons

- You haven’t prevented collision issues so much as delayed them: if you limit clones to a few, you’ll still have contention over whose ‘turn’ it is to mess with the environment. If you make numerous clones you’ll be struggling to keep them synced.

- At its worst, with many running clones, you’ll end up needing a ‘staging 2’ to test all the changes from each clone and how they run together.

Overall this is an expensive proposition. Moreover, this does not address the problem long term as you’ll still have contention and merge conflicts in these environments.

On-demand ephemeral environments

Several versions of ‘environments on demand’ exist currently, for example creating a new env per PR, or one for every dev in a Kubernetes namespace or vcluster.

Pros

- Much of the work of replication is offloaded to an operations team, meaning developers can feel less stressed

- It’s possible to dynamically scale down these ephemeral environments, possibly leading to less ongoing resource use

Cons

- The lack of fidelity: even for moderately complex apps, these envs will invariably diverge from production.

- Relatively high spin-up time. With the need to copy every possible component on the stack, there’s bound to be a lot of mocking, emulators for cloud resources (e.g. LocalStack), and other workarounds needed, adding to implementation time and cost.

A higher-fidelity testing environment that doesn’t suffer frequent commit collisions is a design goal for every distributed microservice team. These different methods will all work at a particular scale and architecture. However, all three solutions described above have limits to how far they will scale.

A new, scalable, approach

While the three techniques above are used on many large teams, there's one solution that's less well-known and has the potential to improve scalability and the fidelity of testing.

Use request-based isolation to safely share a physical environment

This is the method employed at Signadot, and in use at large companies like Uber and Lyft, to allow large teams with complex architectures to still do high fidelity testing.

Pros:

- Cost efficient: isolation is provided without duplicating infrastructure, which also mitigates environment sprawl

- Fast spin-up, as only the modified services are brought up and the rest are shared

- Allows for dynamic testing of specific versions of microservices (i.e. via branches), for example: ‘will the new version of the payments service work with my revised checkout service?’

- No fighting over Staging, or one of staging’s clones.

Cons:

- Services need to propagate request headers

- You’ll need to run a service mesh or sidecars to dynamically route requests
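The two requirements above can be sketched in a few lines. This is an illustration under stated assumptions, not Signadot's API or any service mesh's configuration: the header name `x-sandbox-id`, the service addresses, and the `SANDBOXES` table are all hypothetical. In practice the routing decision would usually live in a sidecar or mesh rule rather than in application code.

```python
from typing import Dict, Mapping, Optional

# Hypothetical header name, chosen for illustration only.
ROUTING_HEADER = "x-sandbox-id"

# Baseline (shared) version of each service; addresses are made up.
BASELINE: Dict[str, str] = {
    "checkout": "checkout.staging.svc:8080",
    "payments": "payments.staging.svc:8080",
}

# Each sandbox lists only the services its owner changed.
SANDBOXES: Dict[str, Dict[str, str]] = {
    "pr-123": {"payments": "payments-pr-123.staging.svc:8080"},
}


def get_header(headers: Mapping[str, str], name: str) -> Optional[str]:
    """Case-insensitive header lookup."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return None


def resolve_backend(service: str, headers: Mapping[str, str]) -> str:
    """Route to the sandboxed version if this request asks for one, else to baseline."""
    sandbox_id = get_header(headers, ROUTING_HEADER)
    overrides = SANDBOXES.get(sandbox_id, {}) if sandbox_id else {}
    return overrides.get(service, BASELINE[service])


def propagate(incoming: Mapping[str, str], outgoing: Mapping[str, str]) -> Dict[str, str]:
    """Copy the routing header onto an outbound call so isolation survives every hop."""
    result = dict(outgoing)
    sandbox_id = get_header(incoming, ROUTING_HEADER)
    if sandbox_id is not None:
        result[ROUTING_HEADER] = sandbox_id
    return result
```

A request tagged `pr-123` reaches the modified payments service but the shared checkout service, while untagged traffic only ever sees baseline. If any service in the call chain drops the header, later hops silently fall back to baseline, which is why header propagation is listed as a requirement.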

Summary: High Fidelity test environments are worth the cost

A shared, well-maintained, and up-to-date staging environment is a key way to make sure our tests closely resemble the real world where code will run. To prevent surprise failures when promoting code from staging to production, it’s always worth the effort to enable high fidelity testing. However, once we’ve created our amazing staging environment and our team scales up, we will have to deal with contention over who has access to experiment on staging.

There are three well-known approaches to sharing a staging environment, and one that is a bit more novel. All will work well at certain scales, and the request-based isolation approach is well suited to growing engineering teams that are looking to scale testing without incurring a steep rise in infrastructure costs.
